RunAnywhere Launches: The Default Way to Run On-Device AI at Scale

RunAnywhere recently launched!

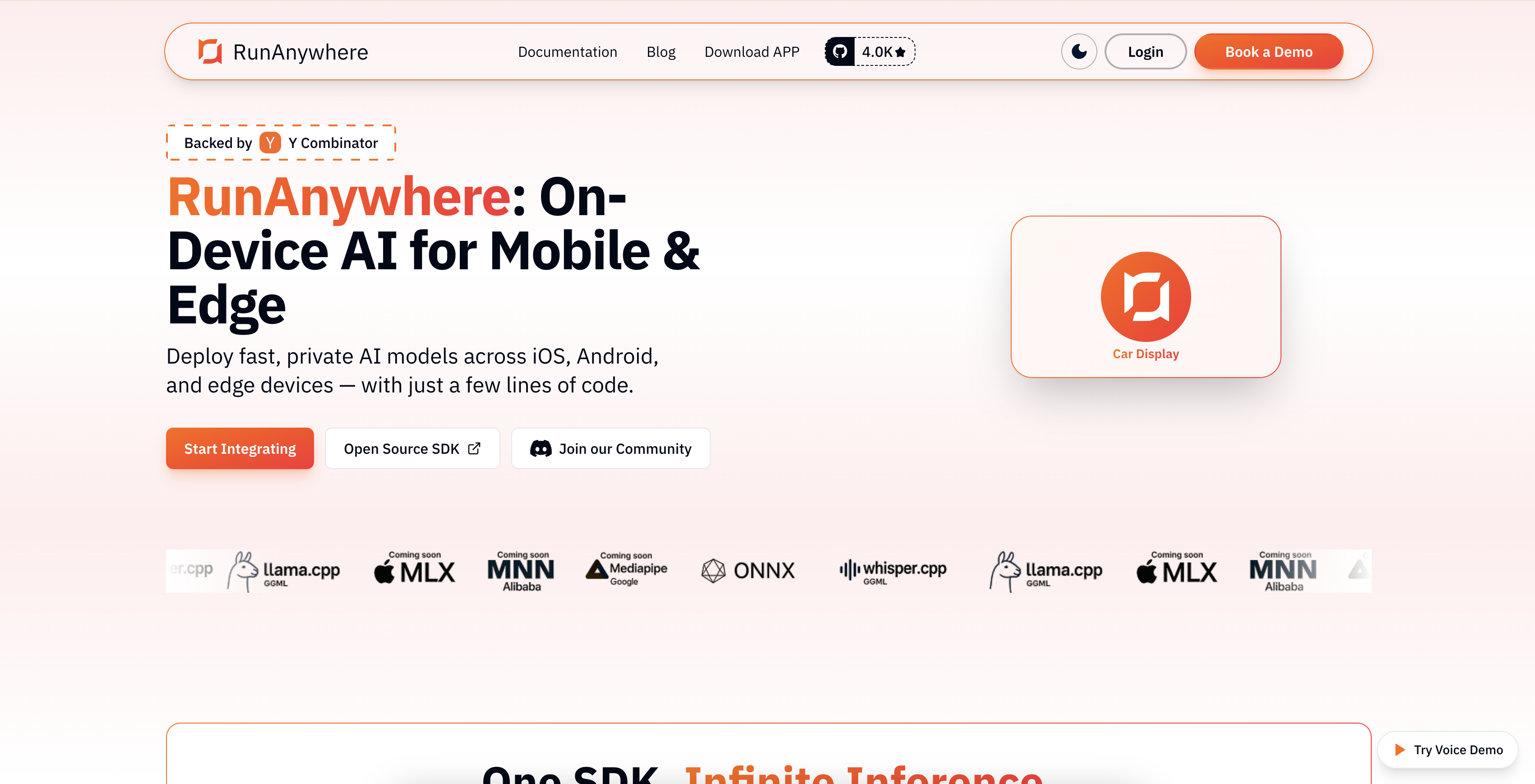

"Deploy fast, private AI models across iOS, Android, and edge devices — with just a few lines of code."

TL;DR: Run multimodal AI fully on-device with one SDK and manage model rollouts + policies from a control plane. They are already live and open source, with ~3.9k stars on GitHub.

Founded by Sanchit Monga & Shubham Malhotra

The Problem

Edge AI is inevitable — users want instant responses, full privacy (health, finance, personal data), and AI that actually works on planes, subways, or spotty rural connections.

But shipping it today is brutal:

- Every device (an iPhone 14, an Android flagship, a low-end phone) has wildly different memory, thermal limits, and accelerators.

- Teams waste quarters rebuilding model download/resume/unzip/versioning, lifecycle management (load/unload without crashing), multi-engine wrappers (llama.cpp, ONNX, etc.), and cross-platform bindings.

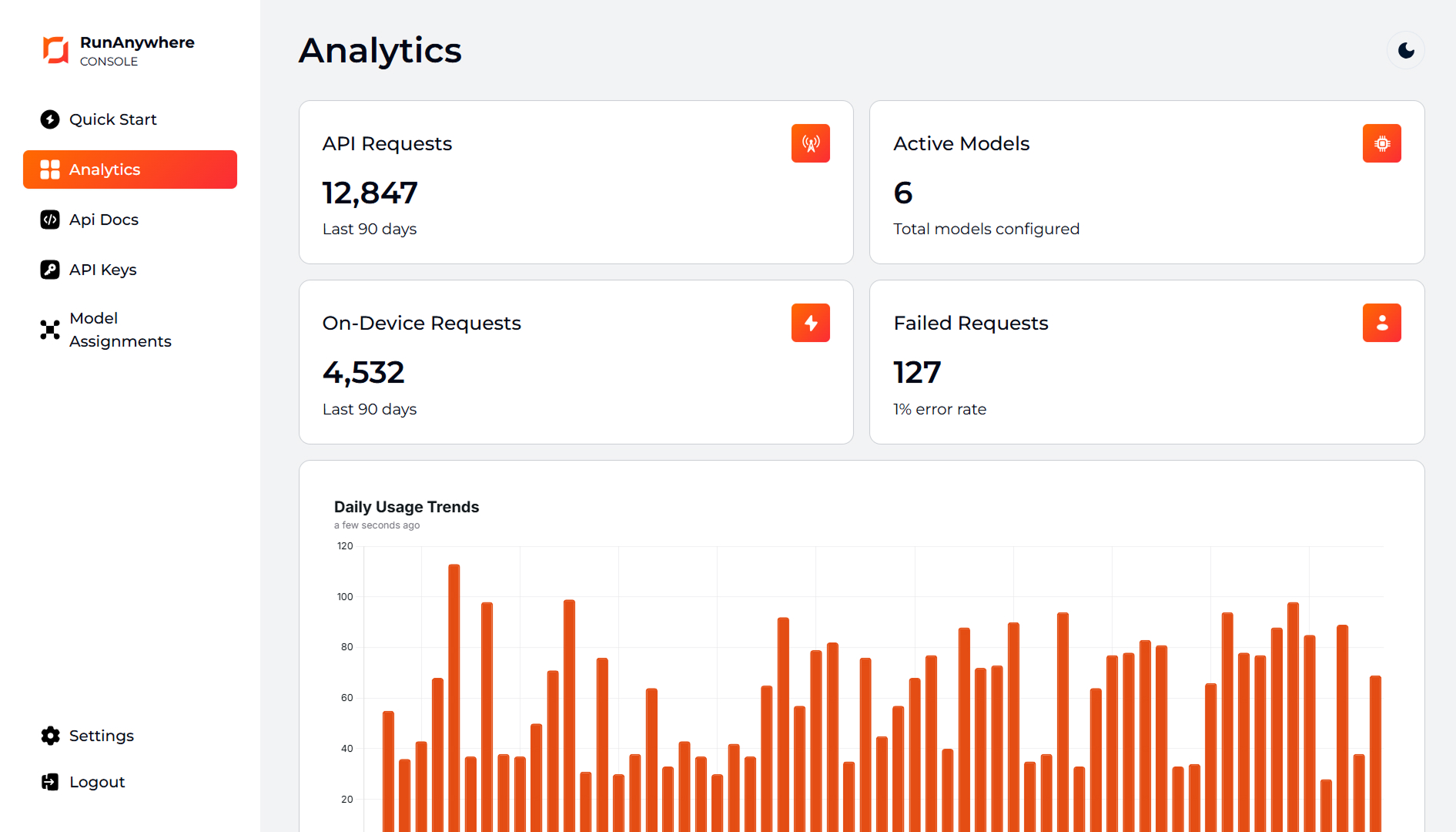

- No real observability: you're blind to fallback rates, per-device performance, and crashes tied to a specific model version.

Result: most teams either give up on local AI or ship a brittle, hacked-together experience.

The Solution: Complete AI Infrastructure

RunAnywhere isn't just a wrapper around a model. It is a full-stack infrastructure layer for on-device intelligence.

1. The "Boring" Stuff Is Built-in: They provide a unified API that handles model delivery (downloading with resume support), extraction, and storage management. You don't need to build a file server client inside your app.

2. Multi-Engine & Cross-Platform: They abstract away the inference backend. Whether the model runs on llama.cpp, ONNX, or another engine, you use one standard SDK, available for:

- iOS (Swift)

- Android (Kotlin)

- React Native

- Flutter

3. Hybrid Routing (The Control Plane): They believe the future isn't "Local Only"; it's hybrid. RunAnywhere allows you to define policies: try to run the request locally to avoid network latency and keep data on the device; if the device is too hot, too old, or the local model's confidence is low, automatically route the request to the cloud.
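Item 1 mentions downloading with resume support. As a rough illustration of what that involves (not RunAnywhere's actual code), here is a TypeScript sketch of the standard HTTP Range approach: request only the missing tail of a partially downloaded file, then append or overwrite depending on whether the server honoured the range. The names `planResume` and `writeModeFor` are hypothetical, and the sketch assumes the expected file size is known ahead of time, for example from a model manifest.

```typescript
// Hypothetical sketch of HTTP Range-based download resumption.
// Not RunAnywhere's API; assumes the expected size comes from a model manifest.

type ResumePlan =
  | { kind: "complete" }                      // file already fully downloaded
  | { kind: "restart" }                       // nothing usable on disk: fetch from byte 0
  | { kind: "resume"; rangeHeader: string }; // fetch only the missing tail

function planResume(bytesOnDisk: number, expectedSize: number): ResumePlan {
  if (bytesOnDisk === expectedSize) return { kind: "complete" };
  // An empty file, or one larger than expected (stale or corrupt), is re-downloaded from scratch.
  if (bytesOnDisk <= 0 || bytesOnDisk > expectedSize) return { kind: "restart" };
  // Byte offsets are zero-based and the range is open-ended: "bytes=N-" asks for byte N through the end.
  return { kind: "resume", rangeHeader: `bytes=${bytesOnDisk}-` };
}

// The response status decides how the body is written to disk.
function writeModeFor(status: number): "append" | "overwrite" {
  if (status === 206) return "append";    // 206 Partial Content: server honoured the Range header
  if (status === 200) return "overwrite"; // 200 OK: server ignored Range and sent the whole file
  throw new Error(`unexpected HTTP status ${status}`);
}

// Usage: 40 MB of a 100 MB model is already on disk.
const plan = planResume(40000000, 100000000);
if (plan.kind === "resume") {
  console.log(plan.rangeHeader); // bytes=40000000-
}
```

A real downloader would typically also verify a checksum once the file is complete and before extracting it; that step is left out of this sketch.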
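To make item 2 concrete, the following hypothetical TypeScript sketch shows one common way to abstract inference backends: every engine implements the same interface, and a registry picks the engine by model format and unloads the previous model before loading the next. `InferenceEngine`, `EngineRegistry`, and `FakeEngine` are illustrative names, not part of the RunAnywhere SDK, and the fake engines stand in for real llama.cpp or ONNX Runtime bindings.

```typescript
// Hypothetical sketch: one interface in front of several inference backends.
// None of these names come from the RunAnywhere SDK.

interface InferenceEngine {
  readonly name: string;
  supports(modelPath: string): boolean; // can this backend run the model file?
  load(modelPath: string): void;
  unload(): void;                        // free weights and accelerator memory
  generate(prompt: string): string;
}

class EngineRegistry {
  private engines: InferenceEngine[] = [];
  private active: InferenceEngine | null = null;

  register(engine: InferenceEngine): void {
    this.engines.push(engine);
  }

  // Picks the first engine that supports the model, unloading the previously
  // active model first so two models never occupy memory at the same time.
  loadModel(modelPath: string): InferenceEngine {
    const engine = this.engines.find((e) => e.supports(modelPath));
    if (!engine) throw new Error(`no engine supports ${modelPath}`);
    this.active?.unload();
    this.active = null; // nothing is loaded until the new load succeeds
    engine.load(modelPath);
    this.active = engine;
    return engine;
  }

  generate(prompt: string): string {
    if (!this.active) throw new Error("no model loaded");
    return this.active.generate(prompt);
  }
}

// Stand-in backend that matches models by file extension and echoes prompts.
class FakeEngine implements InferenceEngine {
  readonly name: string;
  private readonly extension: string;
  loadedModel: string | null = null;

  constructor(name: string, extension: string) {
    this.name = name;
    this.extension = extension;
  }

  supports(modelPath: string): boolean {
    return modelPath.endsWith(this.extension);
  }
  load(modelPath: string): void {
    this.loadedModel = modelPath;
  }
  unload(): void {
    this.loadedModel = null;
  }
  generate(prompt: string): string {
    return `[${this.name}] ${prompt}`;
  }
}

// Usage: app code only talks to the registry.
const registry = new EngineRegistry();
const llamaCpp = new FakeEngine("llama.cpp", ".gguf");
const onnx = new FakeEngine("onnx", ".onnx");
registry.register(llamaCpp);
registry.register(onnx);

registry.loadModel("models/chat.gguf");
console.log(registry.generate("hello")); // [llama.cpp] hello
registry.loadModel("models/speech.onnx"); // unloads the GGUF model first
```

Selecting the backend by file extension (GGUF for llama.cpp, `.onnx` for ONNX Runtime) is a simplification for brevity; a production SDK might read the backend from model metadata instead.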
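Item 3's policy (run locally unless the device is too hot, too old, or the local answer's confidence is low, then fall back to the cloud) can be sketched as a small routing function. This is a hypothetical TypeScript illustration, not RunAnywhere's policy format: the thermal levels are modeled on iOS's `ProcessInfo.ThermalState` values, "too old" is approximated by a minimum-RAM threshold, and the local and cloud calls are synchronous stubs where a real app would use async inference and network calls.

```typescript
// Hypothetical routing policy; field names and thresholds are illustrative only.

// Levels modeled on iOS ProcessInfo.ThermalState, ordered from coolest to hottest.
type ThermalState = "nominal" | "fair" | "serious" | "critical";
const THERMAL_ORDER: ThermalState[] = ["nominal", "fair", "serious", "critical"];

interface DeviceState {
  thermal: ThermalState;
  ramGB: number;
}

interface RoutingPolicy {
  maxThermal: ThermalState; // hotter than this: skip local inference
  minRamGB: number;         // proxy for "too old": not enough memory for the model
  minConfidence: number;    // local answers below this are escalated to the cloud
}

interface Answer {
  text: string;
  confidence: number; // 0..1, however the local model reports it
}

function canRunLocally(device: DeviceState, policy: RoutingPolicy): boolean {
  const tooHot = THERMAL_ORDER.indexOf(device.thermal) > THERMAL_ORDER.indexOf(policy.maxThermal);
  const tooWeak = device.ramGB < policy.minRamGB;
  return !tooHot && !tooWeak;
}

function routeRequest(
  prompt: string,
  device: DeviceState,
  policy: RoutingPolicy,
  runLocal: (prompt: string) => Answer,
  runCloud: (prompt: string) => Answer,
): { route: "local" | "cloud"; answer: Answer } {
  // Pre-check: do not start a local run the device cannot sustain.
  if (!canRunLocally(device, policy)) {
    return { route: "cloud", answer: runCloud(prompt) };
  }
  const localAnswer = runLocal(prompt);
  // Post-check: escalate low-confidence local answers.
  if (localAnswer.confidence < policy.minConfidence) {
    return { route: "cloud", answer: runCloud(prompt) };
  }
  return { route: "local", answer: localAnswer };
}

// Usage with stub models.
const policy: RoutingPolicy = { maxThermal: "fair", minRamGB: 6, minConfidence: 0.7 };
const fakeLocal = (p: string): Answer => ({ text: `local: ${p}`, confidence: 0.9 });
const fakeCloud = (p: string): Answer => ({ text: `cloud: ${p}`, confidence: 1 });

console.log(routeRequest("hi", { thermal: "nominal", ramGB: 8 }, policy, fakeLocal, fakeCloud).route); // local
console.log(routeRequest("hi", { thermal: "serious", ramGB: 8 }, policy, fakeLocal, fakeCloud).route); // cloud
```

Checking device state before running locally avoids starting a model the device cannot sustain, while the confidence check can only happen after a local attempt, so low-confidence requests pay for both a local and a cloud call.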

Quick Links

- OSS SDKs: github.com/RunanywhereAI/runanywhere-sdks (star if it vibes!)

- Full Docs: docs.runanywhere.ai

- Website: runanywhere.ai

Try their demo apps:

The Ask

They are in full execution mode post-launch and hunting design partners + early feedback:

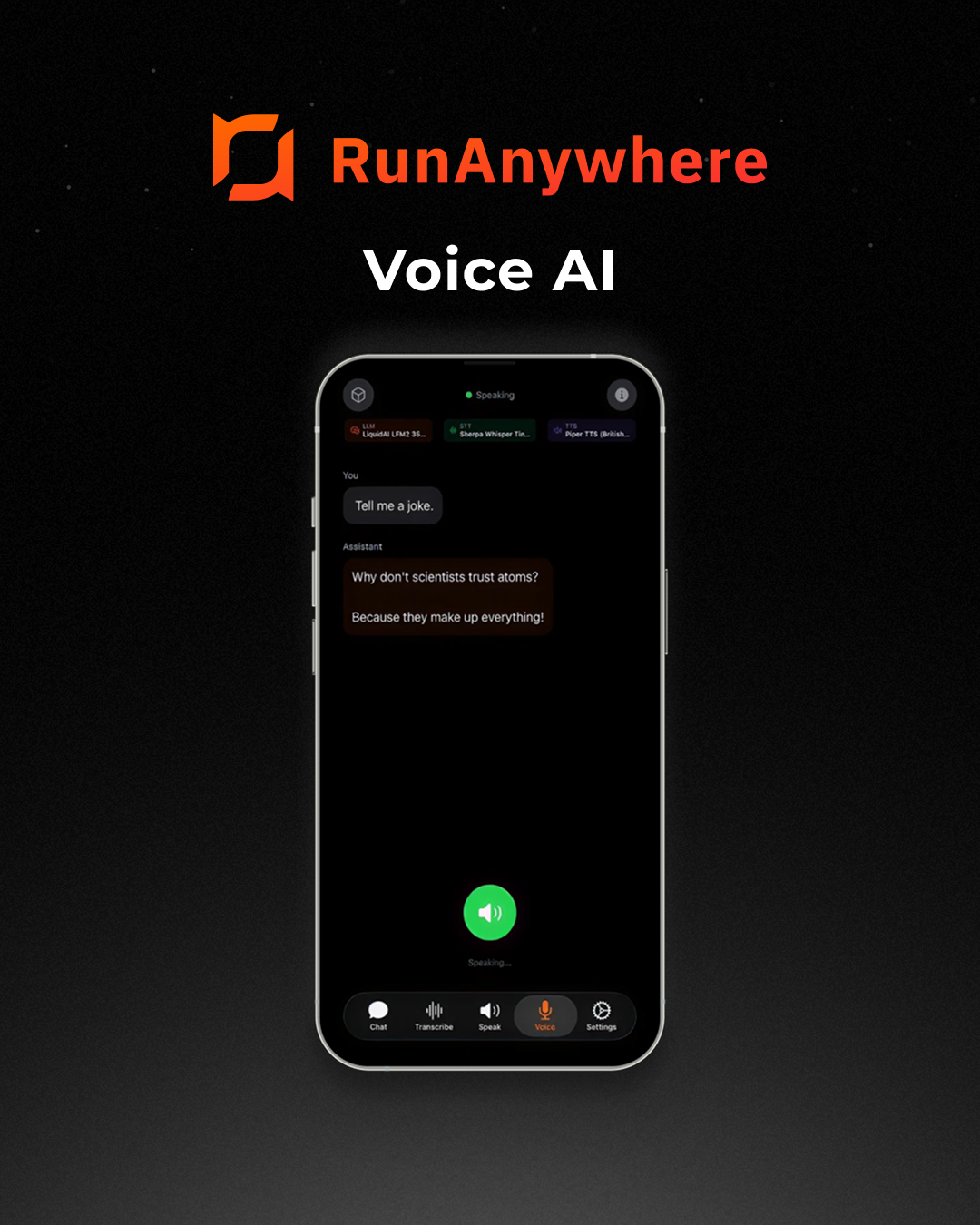

- Building voice AI, offline agents, privacy-sensitive features (health/enterprise/consumer), or hybrid chat in your mobile/edge app?

- Want to eliminate cloud inference costs for repetitive queries while keeping complex ones fast?

- Have a fleet where OTA model updates + observability would save you engineering months?

Learn More & Get In Touch

🌐 Visit www.runanywhere.ai to learn more.

🤝 Drop the founders a line here.

💬 Book a quick call here.

⭐ Give RunAnywhere a star on GitHub.

👣 Follow RunAnywhere on LinkedIn & X.

